Release Notes for Mindbreeze InSpire

Version 23.4

Innovations and new features

Mindbreeze InSpire for Generative AI: Intelligent, secure and fact-based

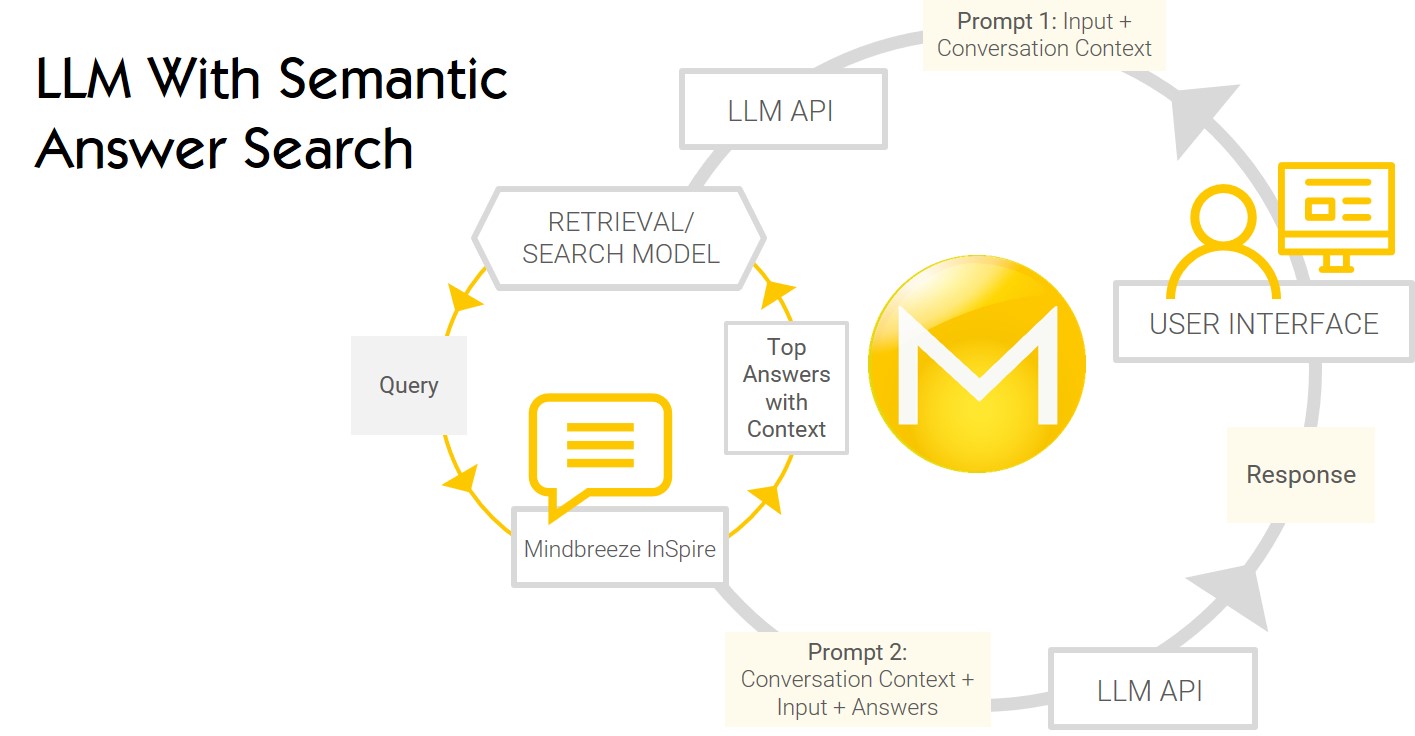

The popularity and wide range of applications of OpenAI's ChatGPT show how relevant Generative AI based on Large Language Models (LLMs) has become. Especially in an enterprise context, this technology can offer great added value. However, issues such as hallucinations, lack of recency, data security, critical questions regarding intellectual property, and the technical handling of sensitive data make its use difficult. By combining its Insight Engine with LLMs, Mindbreeze now offers a solution that compensates for exactly these weaknesses. The result is the ideal basis for Generative AI in an enterprise context.

Extension of semantic search - AI-based understanding of content

LLMs have exceptional capabilities in the processing and generation of human language, while Insight Engines can overcome the aforementioned hurdles through data recency, connectivity and source validation. This makes it possible to work with complete sentences and map the LLM's understanding of the content. The semantic search is built on transformer-based language models in ONNX format. Because open standards are used, customers can integrate and use pre-trained models or self-trained LLMs in Mindbreeze InSpire.
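To illustrate how sentence-level semantic matching can work in principle, the following minimal Python sketch ranks sentences by the cosine similarity of their embedding vectors. It is not Mindbreeze's implementation: the vectors are hand-written placeholders for what a transformer-based model in ONNX format would produce, and the names `cosine_similarity` and `rank_by_similarity` are assumptions made for this example.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction, so treat it as unrelated
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vector, sentence_vectors):
    """Return (sentence, score) pairs sorted from most to least similar."""
    scored = [
        (sentence, cosine_similarity(query_vector, vector))
        for sentence, vector in sentence_vectors.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical 3-dimensional embeddings; real models use hundreds of dimensions.
QUERY = [0.9, 0.1, 0.0]
SENTENCES = {
    "How to reset a password": [0.8, 0.2, 0.1],
    "Quarterly revenue report": [0.0, 0.1, 0.9],
}

ranking = rank_by_similarity(QUERY, SENTENCES)
```

In this toy data set, the sentence whose vector points in nearly the same direction as the query vector is ranked first, regardless of whether the two share any keywords.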

With Mindbreeze InSpire, customers can use Generative AI immediately, as it is seamlessly integrated into the product. Data security plays a key role and is ensured through constant authorisation checks directly with the individual data sources. There, connectors guarantee that the content is always up to date. Thanks to the scalable architecture and the customisable relevance models, customers are able to personalise their interaction with the Insight Engine.

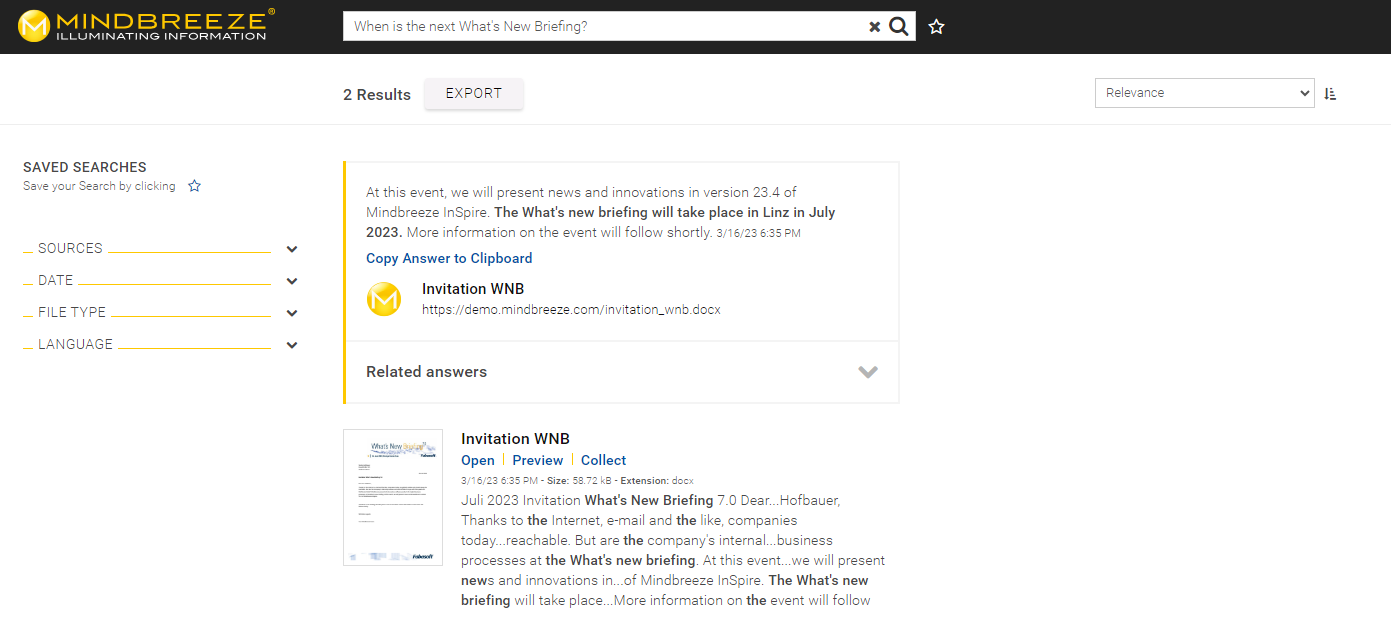

Question Answering - Mindbreeze InSpire now provides answers in context in addition to hits

Based on a deep integration of LLMs into the core of the Insight Engine and the semantic search, the Question Answering feature can now generate answers in natural language. Mindbreeze's many years of experience enable high scalability when processing large data sets. Thanks to the integration of multilingual models, Mindbreeze InSpire can generate information in different languages. For example, a user can ask a question in German and receive an answer in English. Along with each answer, users are provided with source information, so that answers remain traceable and access rights are respected. To provide the user with the necessary context, preceding and following sentences are shown in addition to the actual answer.

Through relevance models, the Question Answering feature can also be adapted to the needs of the user. Users are able to independently configure which parameters are used to measure the relevance of the answers and thus influence the resulting answers.

Question Answering can also be used in the standard client without Generative AI. If potential answers are found, the most relevant answer is displayed in the context of the hit.

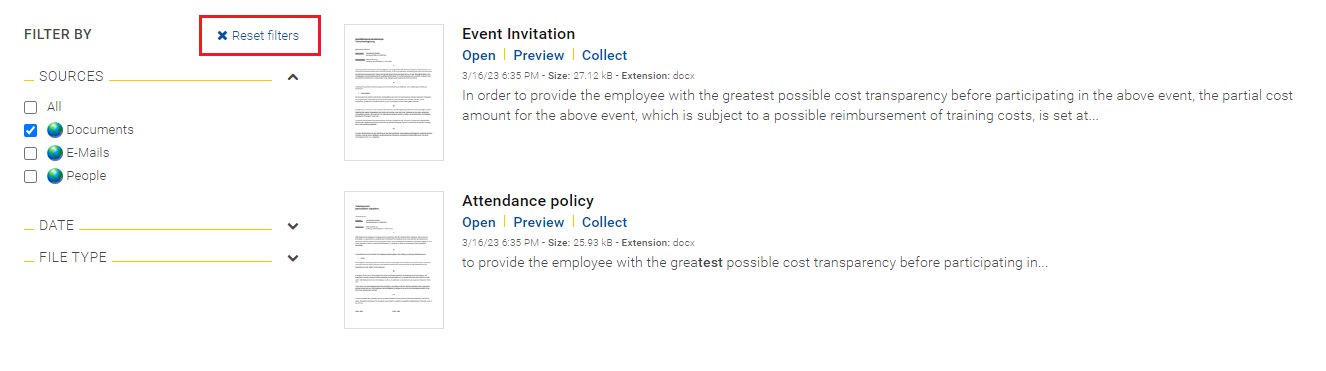

Simplified resetting of filters in the Insight App

Mindbreeze users can limit search results by using filters to obtain a more precise list of results. The filters can be configured by the Mindbreeze administrator for different metadata to offer users a better search experience and more efficient access to information.

To improve the usability of the filters, the option "Reset filter" has been added in version 23.4. It appears above the available filters as soon as a filter is active (see the following screenshot) and resets all active filters with a single click.

New and extended connectors

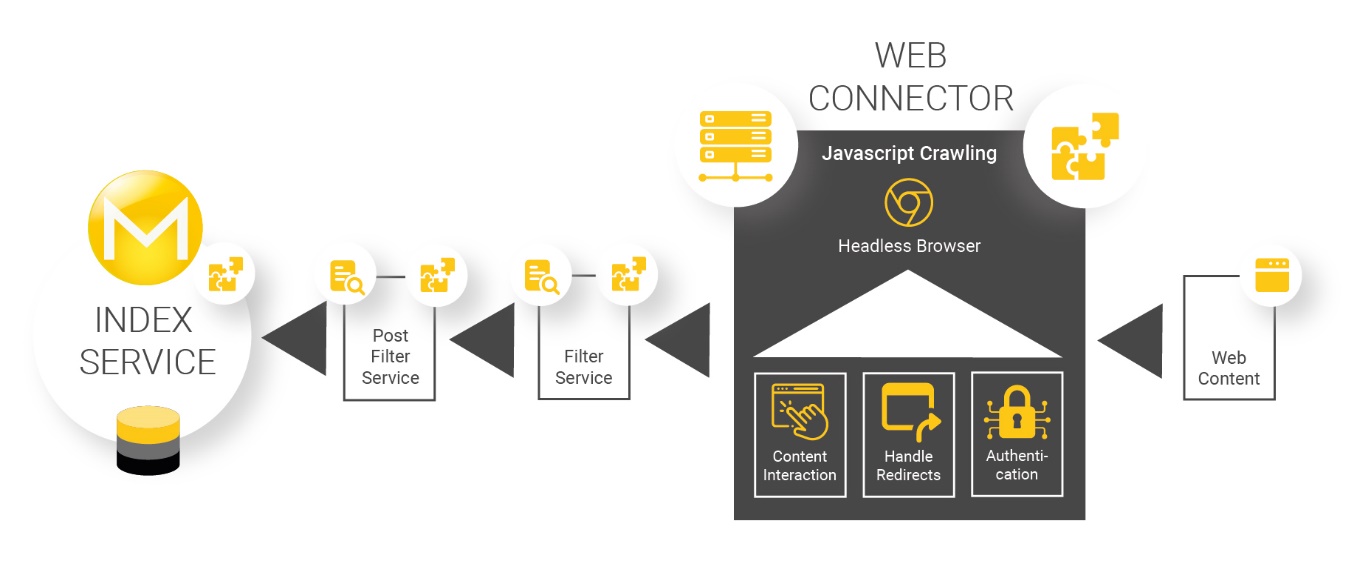

Extended configurability of the script behaviour for JavaScript crawling

JavaScript crawling enables the automatic, script-based simulation of user input when crawling complex websites. This makes it possible to crawl websites with login masks, pop-ups or delayed content. With release 23.4, different scripts can now be defined per sub-URL. This enables the crawling of complex websites which require a variety of different script behaviours using only a single crawler.

In addition, the security of scripts has been improved by requiring a host name to ensure that scripts are only executed on websites for which they are intended. Furthermore, it is now possible to access the Mindbreeze credentials from within the script, so that user names and passwords are no longer displayed in the configuration or in the logs.
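The idea of per-sub-URL scripts combined with a host name check can be sketched as follows. This is only an illustration in Python, not the Web Connector's configuration format: the rule layout, the script names, the host `intranet.example.com` and the function `select_script` are all assumptions made for this example. Access to stored credentials is not modelled here.

```python
from urllib.parse import urlsplit

# Hypothetical rule format: (required host, URL path prefix, script name).
SCRIPT_RULES = [
    ("intranet.example.com", "/login", "fill_login_form"),
    ("intranet.example.com", "/news", "dismiss_popup"),
]


def select_script(url, rules=SCRIPT_RULES):
    """Return the script for the longest matching path prefix, or None.

    A rule is only considered if its host matches the URL's host exactly,
    so a script is never selected for a website it was not written for.
    """
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    best = None  # (prefix, script) of the most specific match so far
    for rule_host, prefix, script in rules:
        if host != rule_host.lower():
            continue  # host check: skip scripts intended for other sites
        if not parts.path.startswith(prefix):
            continue
        if best is None or len(prefix) > len(best[0]):
            best = (prefix, script)
    return best[1] if best else None
```

With these assumed rules, `select_script("https://intranet.example.com/login?next=/")` selects `fill_login_form`, while a URL with the same path on a different host selects no script at all.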

Technical improvements

Support of alternative authentication possibilities via JWT tokens

The Mindbreeze Client Service supports authentication using JWT tokens to enable customers to access Mindbreeze InSpire externally. Access rights to documents and other media can now be defined with additional information in the JWT token. Previously, only basic information about the user was stored, such as the user name or email address. It is now possible to add information such as groups (e.g. department) and roles (e.g. job description) to the JWT token.
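As a rough illustration of what additional information in a JWT token can look like, the following Python sketch decodes the payload of a token and collects the user identity together with groups and roles. The claim names `groups` and `roles` and the function names are assumptions for this example, not the names used by the Client Service. The sketch deliberately skips signature verification, which a real service must perform before trusting any claim.

```python
import base64
import json


def _b64url_decode(segment):
    """Decode a base64url segment, restoring the padding JWTs strip."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def _b64url_encode(data):
    """Encode bytes as unpadded base64url (used only to build the demo token)."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def decode_payload(token):
    """Return the claims of a compact JWT WITHOUT verifying its signature."""
    _header, payload, _signature = token.split(".")
    return json.loads(_b64url_decode(payload))


def extract_principals(claims):
    """Collect the user identity plus any groups and roles from the claims."""
    principals = []
    for key in ("sub", "email"):
        value = claims.get(key)
        if value:
            principals.append(value)
    for key in ("groups", "roles"):  # hypothetical claim names
        principals.extend(claims.get(key, []))
    return principals


# Build an unsigned demo token; real tokens are signed by an identity provider.
demo_claims = {
    "sub": "jdoe",
    "email": "jdoe@example.com",
    "groups": ["engineering"],
    "roles": ["developer"],
}
demo_token = ".".join([
    _b64url_encode(json.dumps({"alg": "none"}).encode("utf-8")),
    _b64url_encode(json.dumps(demo_claims).encode("utf-8")),
    "",
])

principals = extract_principals(decode_payload(demo_token))
```

The resulting list contains the user name and email address as before, plus the group and role entries that can then be matched against document access rights.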

Increased efficiency of access management in ServiceNow

Access rights management in ServiceNow has been improved in version 23.4, making it possible to manage access to documents more effectively. It is now possible to mark an employee or department as inactive to prevent access to documents and other media.

Inactive employees are not included in the Principal Resolution Cache, which reduces cache size and improves performance. Access rights can be managed with the "Constraint Query for Users" setting.
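The effect of excluding inactive employees from a principal cache can be sketched in a few lines of Python. The record layout (`user_name`, `active`, `groups`) and the function `build_principal_cache` are assumptions for this example. They do not reflect the actual ServiceNow Principal Resolution Service or the syntax of its constraint query.

```python
def build_principal_cache(users):
    """Map each active user's name to a sorted list of their groups.

    Users without an explicit active flag are treated as inactive and are
    left out, so they occupy no cache space and resolve to no principals.
    """
    cache = {}
    for user in users:
        if not user.get("active", False):
            continue  # inactive users never enter the cache
        cache[user["user_name"]] = sorted(user.get("groups", []))
    return cache


# Hypothetical user records for illustration only.
USERS = [
    {"user_name": "jdoe", "active": True, "groups": ["sales", "emea"]},
    {"user_name": "asmith", "active": False, "groups": ["sales"]},
]

cache = build_principal_cache(USERS)
```

In this sketch only `jdoe` ends up in the cache, so a lookup for `asmith` yields no groups and therefore no access through group membership.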

Security relevant changes

23.4.0.425

- Updated: Chromium to the most recent available version (CVE-2023-2721, …, CVE-2023-3421)

- Updated: Less package to version 609 or higher (CVE-2023-71442d7613) (CVE-2022-46663)

- Updated: Dell BIOS to version 2.18.1 (CVE-2023-25537) (CVE-2021-38578)

- Updated: Java OpenJDK to version 8u372

Additional changes

23.4.1.526

- Added: Possibility to globally disable the answerbox via an application option.

- Improved: Optimised performance for document authorisation and ACL precomputing.

23.4.1.517

- Added: Support for ACL precomputation when using Reference ACLs

- Fix for: Microsoft Dynamics CRM User Principals are incorrect when the user gets a role from a team in a different business unit

- Fix for: The character "?" at different positions has no impact on the search

- Fix for: Inverter crashes in certain cases when "NGrams for Non-Whitespace Separated Tokens Zone Pattern" is configured

23.4.0.425

- Added: New action handler copy path.

- Added: Entity Recognition processing in PDF preview can be limited (e.g. in large documents) by setting the Preview Length

- Added: Handle suggest timeouts in the background as console warnings

- Added: Client Service setting to extract additional principals out of the JWT Token

- Added: Additional config options in the Web Connector to simplify the JavaScript configuration for combined use cases

- Fix for: Resources configured in MMC can be saved to a wrong path

- Fix for: Status readwrite/readonly is also set correctly for other modes (e.g. deletbuckets).

- Fix for: The overflow scrolling for Entity Highlighting in Preview.

- Fix for: "Precomputed acl threads" setting is ignored when configured via the Mescontrol app.

- Fix for: Talend Data Integration Mindbreeze Components Error (java.lang.NoClassDefFoundError: javax/mail/internet/ParseException).

- Fix for: Too strict implementation of Confluence Space ACLs.

- Fix for: Microsoft SharePoint Online does not correctly process a folder rename during Full Crawl.

- Fix for: NullPointerException in PersonalizedRelevanceTransformer if log:referer is not set.

- Fix for: AWS Login did not work for files containing special characters (GSA Feed Adapter Service).

- Fix for: Some style vulnerability for titles in Client apps.

- Improved: System robustness to recover from missing or corrupt config files.

- Improved: Robustness of Microsoft File Connector with respect to connection timeouts when crawling DFS shares with multiple namespace servers.

- Improved: Visual presentation of date range picker in Insight App

- Improved: Effectiveness of "User Constraint Query" setting in ServiceNow Principal Resolution Service

- Changed: Default value of "Organization Regex" setting in Microsoft SharePoint Online Principal Cache and moved the setting to increase visibility.

- Changed: PoiMsg is now the default filter for .msg files and the p7m filter is disabled in the default configuration.