Release Notes for Mindbreeze InSpire

Version 24.1

Innovations and new features

24.1.1.338

Mindbreeze InSpire AI Chat available with Large Language Models from OpenAI, Microsoft Azure OpenAI and Aleph Alpha

Mindbreeze customers have the option of linking the Mindbreeze InSpire AI Chat with Large Language Models (LLMs) that are operated either locally or via a Mindbreeze SaaS environment. With the Mindbreeze InSpire 24.1 Release Version 24.1.1.338, linking Mindbreeze InSpire AI Chat with LLMs from cloud providers OpenAI, Microsoft Azure OpenAI and Aleph Alpha is now also available. This enables the use of these comprehensively trained models in combination with the Mindbreeze InSpire AI Chat.

Linking a Large Language Model from OpenAI, Microsoft Azure OpenAI or Aleph Alpha with the Mindbreeze InSpire AI Chat is easy to configure. In the Retrieval Augmented Generation Administration (RAG Administration), administrators can link OpenAI models such as GPT-3.5 Turbo and GPT-4, as well as Aleph Alpha models, with Mindbreeze InSpire. Integrating an OpenAI LLM requires either a valid API key or provision of the OpenAI LLM via Microsoft Azure.

24.1.0.322

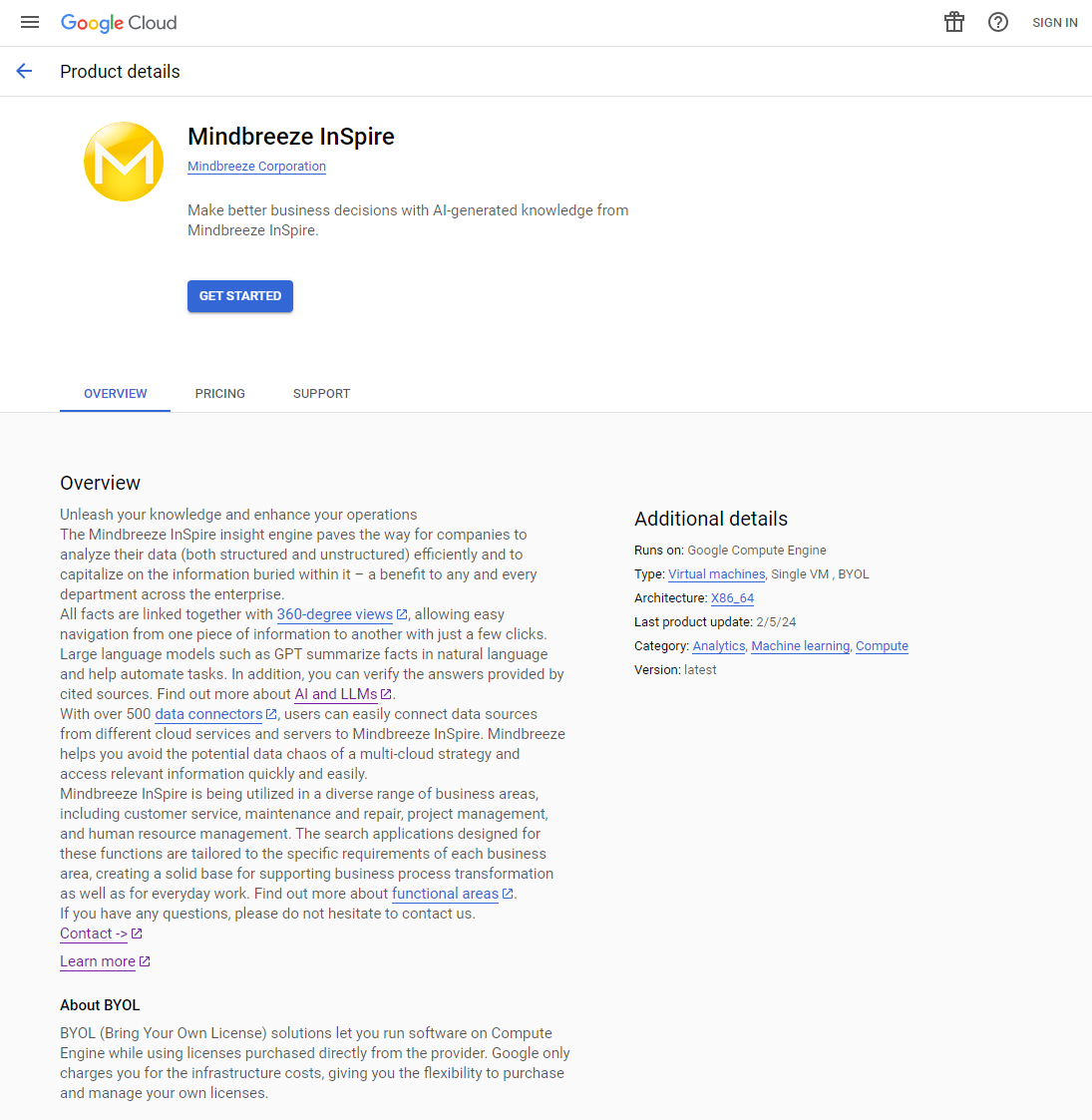

Mindbreeze InSpire also available in the Google Cloud Marketplace

Mindbreeze InSpire is now also available as a Google Cloud Image in the Google Cloud Marketplace. Mindbreeze customers can choose between the contract tiers Mindbreeze InSpire 1M and Mindbreeze InSpire 10M. Mindbreeze InSpire can thus be operated on Google Cloud as an alternative to On-Premises, SaaS, AWS and Microsoft Azure. In addition to a Google Cloud Marketplace subscription, a Mindbreeze license is required for each instance.

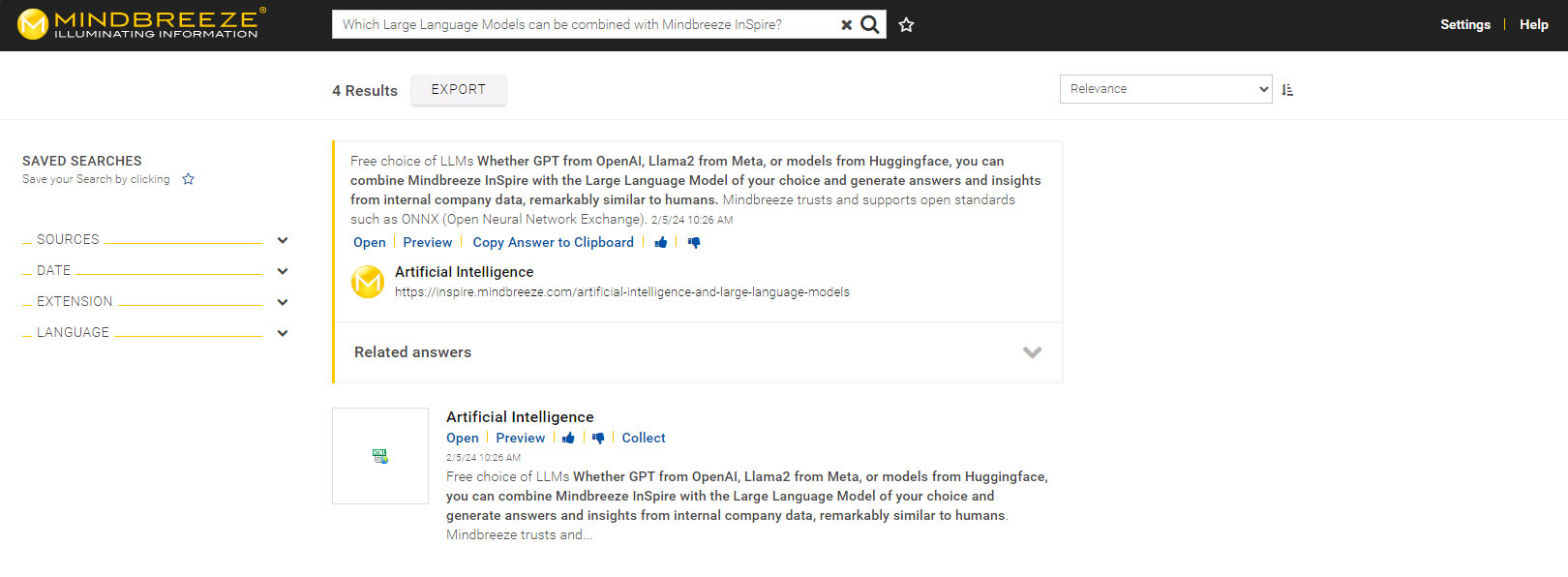

Increased comprehensibility of answers through optimised sentence segmentation

By using Large Language Models, users receive answers to their questions in complete sentences. This enables more direct and faster access to information. With the Mindbreeze InSpire 24.1 release, the LLM also considers the layout of the document when generating an answer. As displayed in the screenshot, the recognition of sentences is more precise and the comprehensibility of answers is increased.

This is made possible by optimised sentence segmentation. In addition to punctuation, the LLM now also considers certain layout information. As a result, the recognition of individual sentences is more reliable and the answers generated are more understandable.
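The idea of combining punctuation with layout information can be illustrated with a minimal sketch. This is not the Mindbreeze implementation. It assumes plain text in which blank lines mark layout boundaries (for example between a heading and a paragraph), and the function name `segment_sentences` is hypothetical.

```python
import re
from typing import List


def segment_sentences(text: str) -> List[str]:
    """Split text into sentences using punctuation and a simple layout cue."""
    sentences: List[str] = []
    # Layout cue (assumption): a blank line separates blocks such as
    # headings, paragraphs or list items, even without punctuation.
    for block in re.split(r"\n\s*\n", text):
        # Soft line wraps inside a block are joined with single spaces.
        block = " ".join(block.split())
        if not block:
            continue
        # Punctuation cue: split after '.', '!' or '?' followed by whitespace.
        sentences.extend(re.split(r"(?<=[.!?])\s+", block))
    return sentences


sample = "Release Overview\n\nThe index is rebuilt\nevery night. Queries stay available."
for sentence in segment_sentences(sample):
    print(sentence)
```

A splitter that relies on punctuation alone would merge the heading "Release Overview" with the sentence that follows it, because the heading has no terminating punctuation. The layout cue keeps the two apart.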

New and advanced connectors

Customised metadata through the indexing of custom fields in Atlassian Jira

Users of Atlassian Jira have the option of creating property fields in "Issues". Mindbreeze InSpire is able to index these fields and make the metadata available as a filter and search restriction. With the Mindbreeze InSpire 24.1 release, custom fields can now be indexed as well. Users are thus able to narrow down the search results in the Search Client more precisely with this metadata.

For example, suppose a user has created a custom field for a business unit with the value "Sales" in Atlassian Jira. This metadata is now available in Mindbreeze InSpire, so the search results can be filtered by the value "Sales". In addition, it is possible to control indexing by specifying which metadata from an issue is indexed.
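As a rough illustration of how an indexed custom field value can act as a filter, the following sketch narrows a list of documents by one metadata value. The document structure, the field name `business_unit` and the function `filter_by_metadata` are hypothetical and are not part of the Mindbreeze InSpire or Atlassian Jira APIs.

```python
from typing import Any, Dict, List

# Hypothetical search results with metadata taken from Jira custom fields.
documents: List[Dict[str, Any]] = [
    {"key": "PROJ-1", "summary": "Renew contract", "metadata": {"business_unit": "Sales"}},
    {"key": "PROJ-2", "summary": "Fix login page", "metadata": {"business_unit": "Engineering"}},
    {"key": "PROJ-3", "summary": "Prepare offer", "metadata": {"business_unit": "Sales"}},
    {"key": "PROJ-4", "summary": "Untagged issue", "metadata": {}},
]


def filter_by_metadata(docs: List[Dict[str, Any]], name: str, value: str) -> List[Dict[str, Any]]:
    """Return only the documents whose metadata field `name` equals `value`."""
    return [doc for doc in docs if doc.get("metadata", {}).get(name) == value]


sales_issues = filter_by_metadata(documents, "business_unit", "Sales")
print([doc["key"] for doc in sales_issues])
```

Documents that do not carry the custom field at all, such as the last entry, are simply excluded from the filtered result.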

Technical extensions

More comprehensive support for custom translations in the Mindbreeze InSpire Insight App Client

The addition of custom translations in the Mindbreeze InSpire Insight App Client is supported even more extensively with the Mindbreeze InSpire 24.1 release. Administrators now have the option to add translations according to the ISO 639 standard in up to 184 languages. This makes it possible to customise the Mindbreeze InSpire Insight App Client even more precisely to your own requirements.

Link to the documentation for adding custom translations

Simplified configuration of the Hierarchical CSV Enricher

Depending on the use case, it may be necessary for users to configure multiple Hierarchical CSV Enricher services, for example to generate multiple metadata. With the Mindbreeze InSpire 24.1 release, this configuration process is easier to perform. Users are now able to cover multiple use cases with a single Hierarchical CSV Enricher service. This favours faster inversion, which also speeds up the index creation process.

The simplified configuration is made possible by dynamic metadata. Users are thus able to determine the generation of the metadata in the CSV depending on the use case. This means that only the configuration of one Hierarchical CSV Enricher is required for several use cases.

Appliance platform - updating the basis for container images to AlmaLinux 8

Mindbreeze places particular importance on security and is constantly expanding and improving it. With the Mindbreeze InSpire 24.1 release, Mindbreeze is updating all container images to a distribution that is binary-compatible with RHEL8 (AlmaLinux 8). This update enables Mindbreeze to provide its customers with security updates more quickly, and to react faster to security issues. In addition, the increased performance of Mindbreeze InSpire is a further advantage for Mindbreeze customers.

Security-relevant changes

24.1.1.338

- Updated: Chromium to version 122.0.6261.57.

24.1.0.322

- Updated: Keycloak to version 23.0.4.

- Updated: CoreOS to version 39.

- Updated: xmlsec.jar to version 2.2.6 (CVE-2023-44483).

- Updated: Apache Tomcat to version 8.5.98 (CVE-2023-42795, CVE-2023-45648, CVE-2023-46589).

- Updated: OpenJDK to version 1.8.0.402.

- Updated: Chromium to version 121.0.6167.85.

- Updated: Python Security.

Additional changes

24.1.2.341

- Fix for: Kerberos keytabs without AES keys did not work.

24.1.1.338

- Added: Support for OpenAI and Aleph Alpha LLMs for RAG.

- Fix for: Downgrade due to unnecessary migration of the Keycloak database.

24.1.0.322

- Added: Service Configurations and Service Data are included in Backup.

- Added: The Management Center is now available in German.

- Added: Possibility to index additional metadata from Jira custom fields.

- Added: Configurable "Zone ID" in Impersonation Token settings for advanced AI Chat setups.

- Added: Option for search container component to inherit constraints from parent search.

- Added: Semantic search features (Language Detection, NER, Compound Splitting, Sentence Transformation) are available on AlmaLinux 8.

- Added: The RAG configuration is included in Snapshots and backups.

- Added: Setting in the Pipeline for always attaching the retrieved sources to the generated answer (Insight Services for RAG).

- Added: PostgreSQL 15 is available for new appliances.

- Fix for: Atlassian Confluence Sitemap Generator failed when trying to write data that contains invalid XML characters.

- Fix for: mesnode does not start if mesmaster is not installed.

- Fix for: InSpire Update fails with Docker images tagged with more than one “/”.

- Fix for: app.telemetry columns for Insight Services are not updated.

- Fix for: Inverter threads do not start again after setting index mode from “READONLY” to “READWRITE” under certain circumstances.

- Fix for: The index option "Transform Terms to Similarity" is not set to "Optional" by default.

- Fix for: Terms consisting of only stop characters are shown as "missing terms".

- Fix for: QueryPerformanceTester does not correctly test the contentFetch action and does not trigger highlighting in PDF preview.

- Improved: Client Service now supports custom translations for any ISO 639 Language.

- Improved: The configuration of the Hierarchical CSV Enricher Service supports the usage of one Hierarchical CSV Enricher Service for multiple use cases.

- Improved: The custom.css provided in the plugin zip is simplified and cleaned up.

- Improved: The Dropbox Connector now supports OAuth2 Authentication.

- Improved: NLQA sentence segmentation considers layout information as well.

- Improved: User experience of switching between RAG services.

- Changed: softwaretelemetry.js is not cached (browser cache) anymore.

- Changed: NLQA is not a beta feature anymore. The restriction to 50,000 documents has been removed and an additional built-in model all-MiniLM-L6-v2 has been added.